August 30, 2018 1:06 PM PDT

First, there is no real fix.

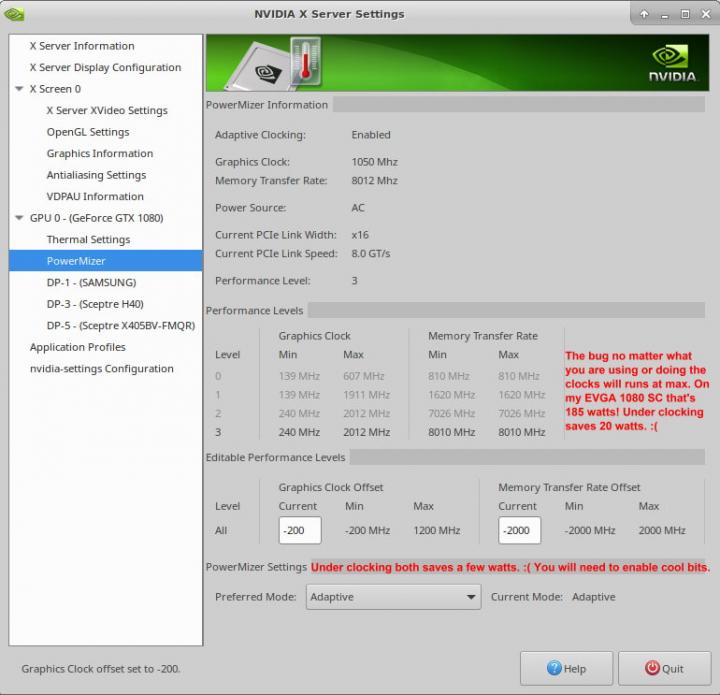

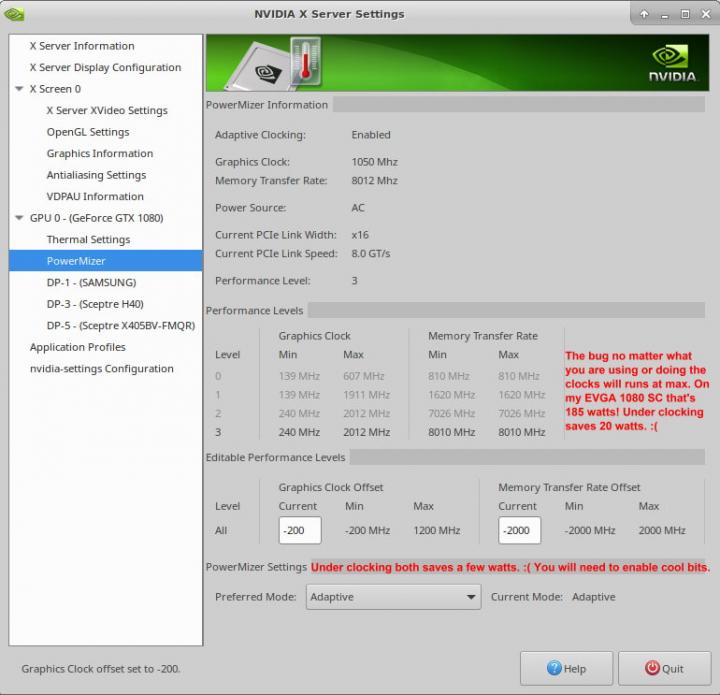

This is a bug in the Nvidia drivers and has been for a while. Some older drivers work fine, and all the drivers work on two monitors at any resolution or refresh rate. Most Nvidia cards support three monitors, but once you enable the third monitor your power use can jump up to 185 watts on the card, because the card will run at top speed all the time!

Who could / can fix this problem?

Well, NVIDIA could FIX their broken Linux drivers. The makers of each card could also fix the problem, like EVGA making a version of Precision X FOR LINUX! When you have THOUSANDS of customers buying video cards from $400 to $1,400+, the least any company could do is support the OS their customers use. But I understand why they don't support a totally FREE operating system that works great and runs everything 99.99% of end users would ever need!

The most common EXCUSE I hear from most companies is "there are so many versions of Linux". OK, this is true, Linux has a version for every user. BUT all the versions have a lot in common! So MAKE one .DEB and one .RPM THAT WORK (this is not just for drivers but for all software). And as always, the users will fix them: make the LINUX drivers/software OPEN SOURCE and let the Linux community fix them if you can't hire a Linux programmer!

OK, on to some workarounds that don't need a bunch of terminal commands.

Option 1.

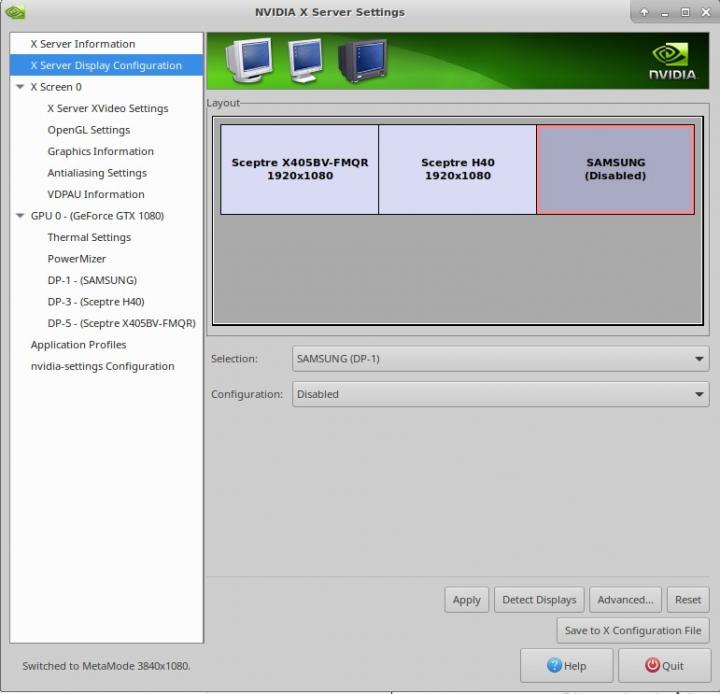

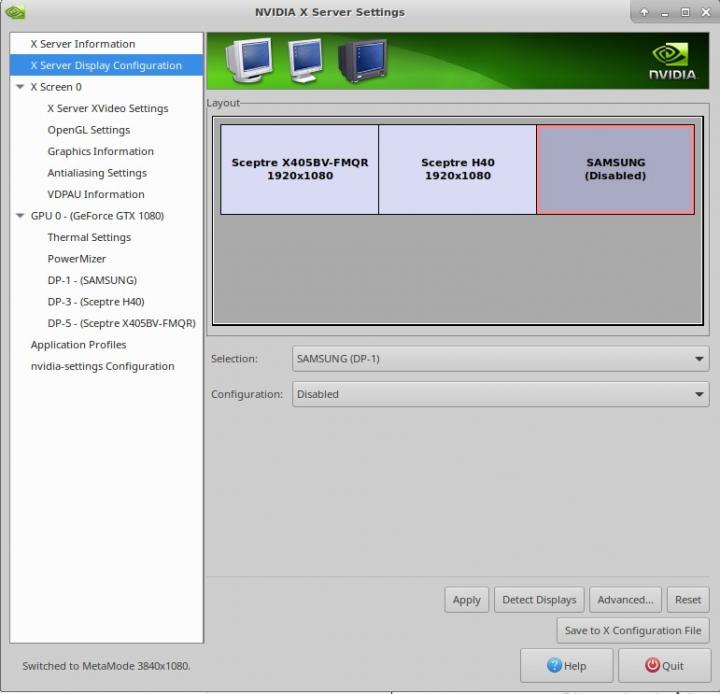

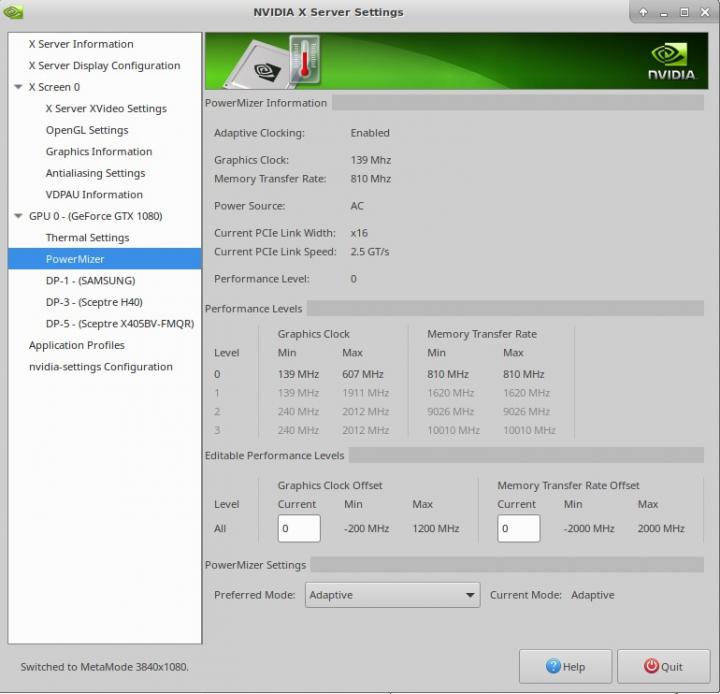

Disable the third monitor. That will drop you right to Level 0, the lowest Graphics Clock and Memory Transfer Rate.

Only enable the third monitor when you need it. LOL, yeah, YOU ALWAYS NEED IT! I know, why have three monitors when you are forced to use only two or burn up your hardware doing nothing!

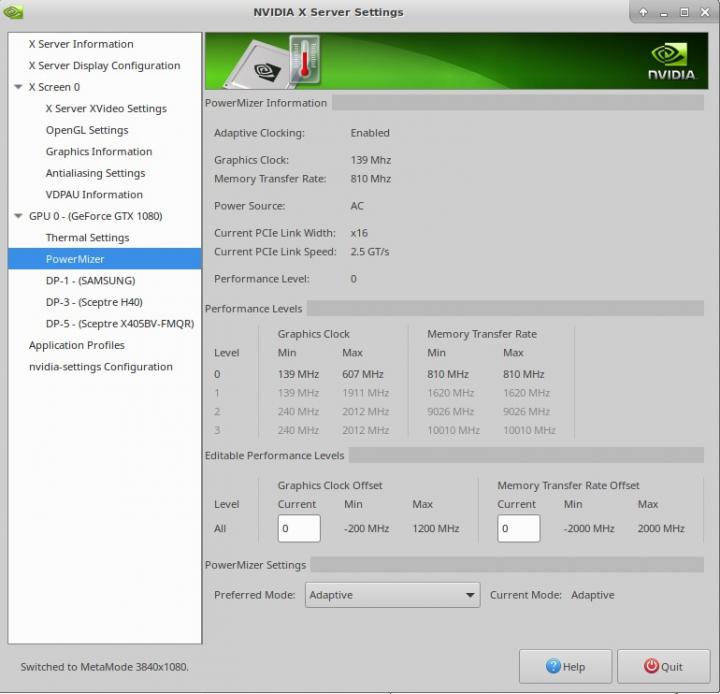

Once you enable the third monitor, the card runs at top speed even when doing nothing! You can underclock the card/s to save a little.

You will need to install/enable coolbits.

Linux enabling coolbits
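If you don't mind a little scripting, here is a rough sketch of how the Coolbits number is put together. Coolbits is a bitmask set in the Device section of xorg.conf, and the bit values below are the ones I understand the NVIDIA driver README to document. The feature names and both function names are made up for this example, so check the README for your driver version before trusting it.

```python
# Bit values for the "Coolbits" X config option, as I understand the
# NVIDIA driver README. The dictionary keys are hypothetical labels
# chosen for this sketch, not names the driver uses.
COOLBITS_FLAGS = {
    "legacy_clocks": 1,      # clock control on older (pre-Fermi) GPUs
    "sli_mixed_memory": 2,   # SLI with mismatched video memory
    "fan_control": 4,        # manual fan speed on the Thermal page
    "clock_offsets": 8,      # clock offsets on the PowerMizer page
    "overvoltage": 16,       # overvoltage via nvidia-settings
}


def coolbits_value(features):
    """OR together the bits for the requested features."""
    value = 0
    for name in features:
        value |= COOLBITS_FLAGS[name]
    return value


def xorg_option_line(features):
    """Build the line that goes inside Section "Device" in xorg.conf."""
    return f'Option "Coolbits" "{coolbits_value(features)}"'


if __name__ == "__main__":
    # Underclocking only needs the clock offset bit.
    print(xorg_option_line(["clock_offsets"]))
```

For underclocking you should only need the clock offset bit. Running `sudo nvidia-xconfig --cool-bits=8` should write the same option for you, and you need to restart X (log out and back in) before the extra controls show up in the Nvidia X Server Settings.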

Even with Coolbits enabled, you won't get down to the 30 or 40 watts that you should be using at idle.

This option saves you about 20 watts and you don't need to do anything else. But if 165 watts doing NOTHING is still too high, go to Option 2.

Option 2 with the third monitor enabled.

These are hit-and-miss options that will lower the power bleed but still not get you to the lowest settings. But they are better than running your fans at 65% just to cool a card that is doing nothing!

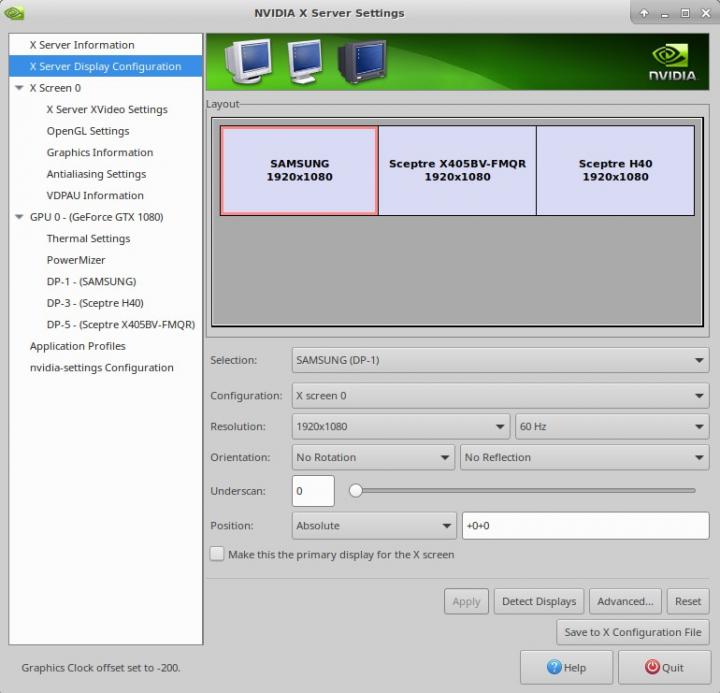

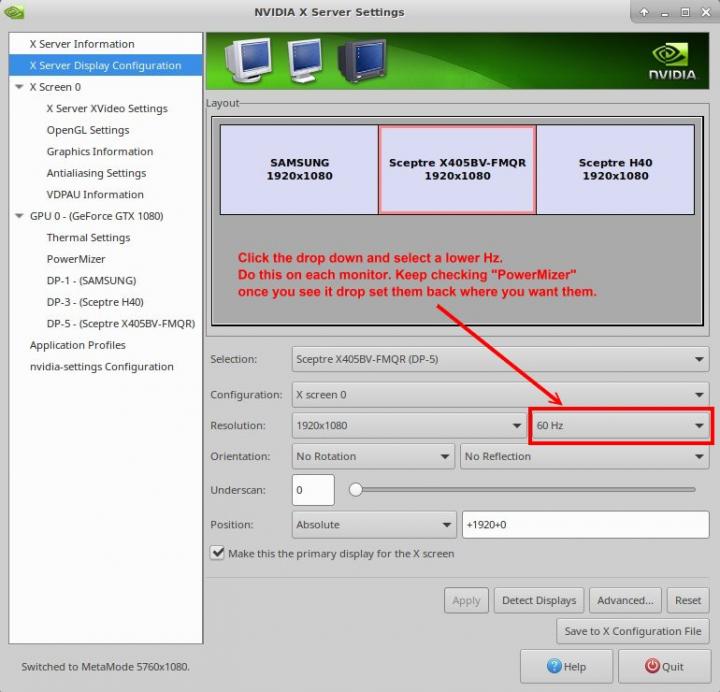

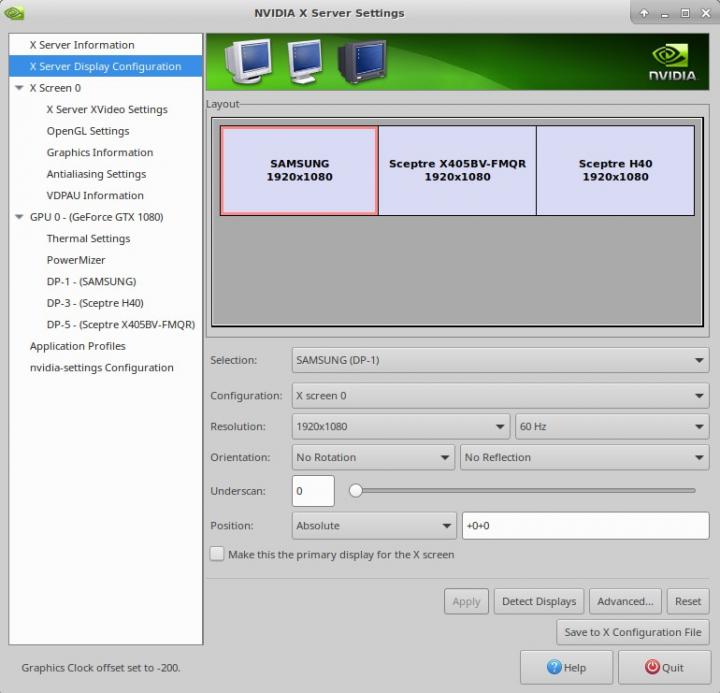

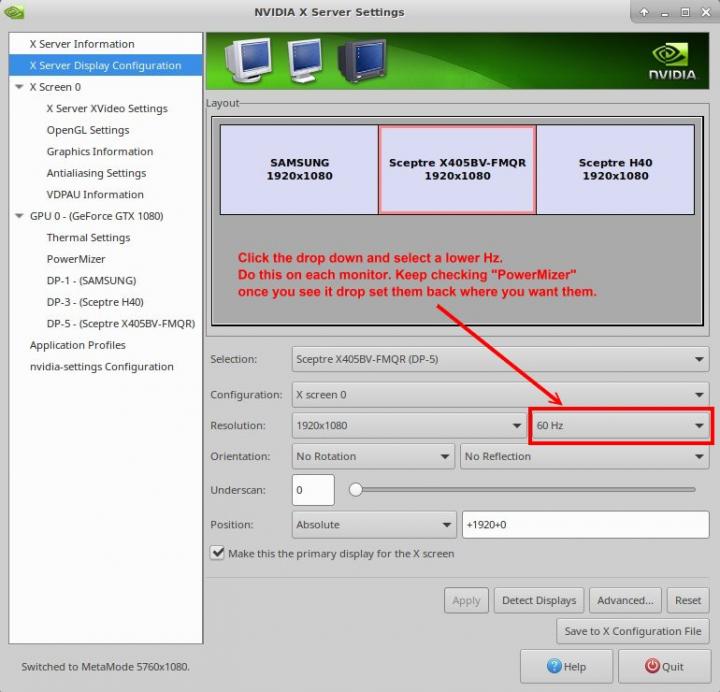

You can drop your card from 165/185 watts to about 65/85 watts. Open the Nvidia X Server Settings and start changing the Hz on your monitors: 24, 30, 50, 60, 120. Here is what works for me, and yes, I need to do it each time I start Xubuntu:

Samsung 60 Hz, center Sceptre 24 Hz, right Sceptre 50 Hz.

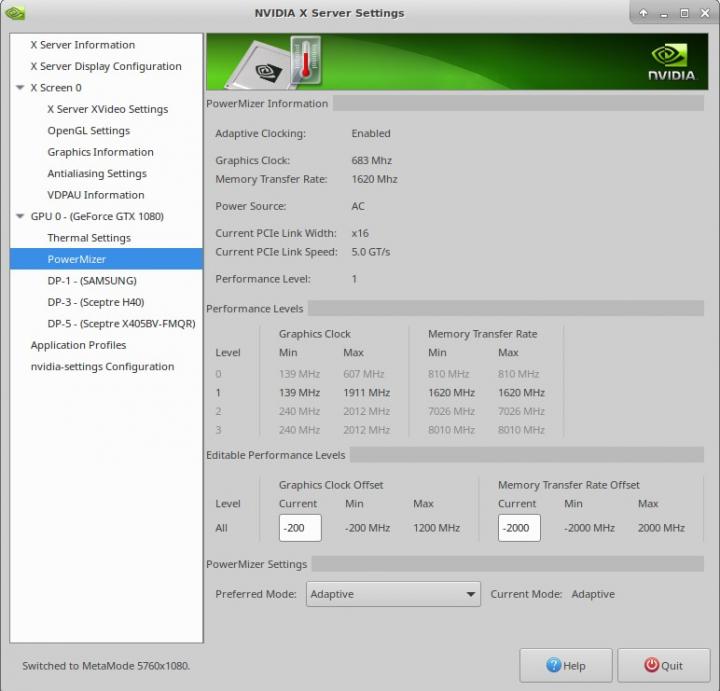

This is ONLY to drop the power use. Once it's dropped you can change them all back and it will stay at Level 1. So yes, it's a BUG, and YES, it's been broken for over three years! And NO, it won't make Linux users want to use crap Windows!
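Since this has to be redone on every start, it could be scripted instead of clicked through. Below is a minimal sketch using `xrandr`, which does the same refresh rate change as the settings window. The output names (`DP-0`, `DP-2`, `HDMI-0`), the 1920x1080 mode, the 60 Hz "normal" rates, and the 10 second wait are all assumptions for the example. Only the 60/24/50 Hz combination comes from my setup, so run `xrandr --query` to see what your monitors are really called and what rates they offer.

```python
import subprocess
import time

# Hypothetical output names and mode; check `xrandr --query` for real ones.
MODE = "1920x1080"
# The 60/24/50 Hz mix from the post, mapped onto made-up output names.
TEMP_RATES = {"DP-0": 60, "DP-2": 24, "HDMI-0": 50}
# Assumed "normal" rates to switch back to afterwards.
NORMAL_RATES = {"DP-0": 60, "DP-2": 60, "HDMI-0": 60}


def xrandr_commands(rates, mode=MODE):
    """Build one xrandr command per monitor for the given refresh rates."""
    return [
        ["xrandr", "--output", output, "--mode", mode, "--rate", str(hz)]
        for output, hz in rates.items()
    ]


def run_workaround(dry_run=True, settle_seconds=10):
    """Apply the temporary rates, wait, then switch back to normal."""
    for rates in (TEMP_RATES, NORMAL_RATES):
        for cmd in xrandr_commands(rates):
            if dry_run:
                print(" ".join(cmd))  # only show what would be run
            else:
                subprocess.run(cmd, check=True)
        if not dry_run and rates is TEMP_RATES:
            # Assumed pause so the card has time to drop a level.
            time.sleep(settle_seconds)


if __name__ == "__main__":
    run_workaround(dry_run=True)
```

With `dry_run=True` it only prints the commands, so you can check them before letting it touch your displays. Like the manual version, this is hit and miss, and the rates that work on your monitors may be different.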

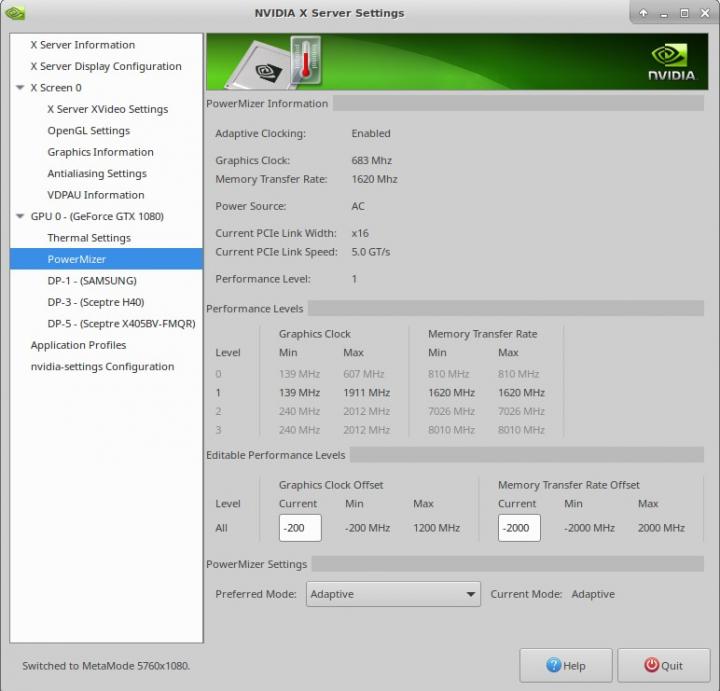

Then I go to PowerMizer and it's dropped to Level 1, still burning power I'M NOT USING! But 65 - 85 watts is better than 185 watts! (On my EVGA 1080 SC2)
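If you would rather check the numbers from a script than keep PowerMizer open, `nvidia-smi` can report the power draw and clocks. This is only a sketch: the function names are made up, and it assumes your card reports `power.draw` as a number (some cards return `[N/A]`, which would make the parsing fail). Also note the `pstate` value (P0, P2, P8 and so on) is nvidia-smi's own numbering and may not line up with the Level shown in PowerMizer.

```python
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY = "power.draw,clocks.gr,clocks.mem,pstate"


def parse_reading(csv_line):
    """Turn one 'power, graphics, memory, pstate' CSV line into a dict."""
    power, graphics, memory, pstate = [f.strip() for f in csv_line.split(",")]
    return {
        "power_w": float(power),       # fails if the card reports [N/A]
        "graphics_mhz": int(graphics),
        "memory_mhz": int(memory),
        "pstate": pstate,
    }


def read_gpu(index=0):
    """Ask nvidia-smi for the current readings of one GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--id={index}",
            f"--query-gpu={QUERY}",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_reading(result.stdout.strip().splitlines()[0])
```

Calling `read_gpu()` before and after changing the refresh rates should show whether the card really dropped, without trusting the fan noise.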

It is a pain, but until EVGA makes EVGA Precision X OC for Linux, or Nvidia makes good drivers, or they both just make their stuff OPEN SOURCE, the only other thing to do is use Winblows. So if you don't mind a super slow and SUPER annoying OS (WIN 10), use Winblows. This workaround is still MUCH faster than trying to secure or stop the DATA-MINING in Win 8.1 & 10.

As always, if you have any questions just ask and I will try to help.

This post was edited by beastusa at August 30, 2018 1:36 PM PDT
